Chronicle Detection As Code with Google IDX and GitHub

After recently reading the awesome two-part series by David French (part 1 and part 2), I decided to take his work for a spin. I ran into a couple of areas of frustration: there was no VSCode extension, and I wanted to use GitHub Actions throughout my workflow instead of GitLab.

If you haven’t already, make sure to check out the repo for the work David did here. I will be using it throughout this article, with a couple of tweaks.

VSCode Extension

tl;dr: I created a basic VSCode extension for syntax highlighting. You can view the source, send over pull requests, and report any issues here. Make sure to install the settings.json file for the colors.

You can also view it on open-vsx. I’ll be pushing it to Azure DevOps in the near future.

It is pretty basic and does no sanity checking, but the colors match Chronicle’s built-in editor very closely.

GitHub & Code Mod

The first thing I did was set up a repository in GitHub to start working with the rule_manager tool. I also made one adjustment to the code to ignore archived rules (special thanks to Mike Wilusz for helping here). Personally, I don’t want archived rules coming into the pipeline, so we ignore them.
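To make the effect of that adjustment concrete, here is a minimal stand-alone sketch of the same filtering idea. It is not the rule_manager code itself: the function name `drop_archived` and the sample dictionaries (including the `name` field) are hypothetical, and only the `deployment_state` and `archived` keys come from the snippet used in this article.

```python
from typing import Any, Mapping, Sequence


def drop_archived(rules: Sequence[Mapping[str, Any]]) -> list[Mapping[str, Any]]:
    """Return only the rules whose deployment state is not archived."""
    kept = []
    for rule in rules:
        # A missing deployment_state or archived key is treated as "not archived".
        state = rule.get("deployment_state") or {}
        if state.get("archived") is True:
            continue
        kept.append(rule)
    return kept


# Hypothetical sample payloads; real rule dictionaries carry many more fields.
sample_rules = [
    {"name": "adfind_exec", "deployment_state": {"archived": False}},
    {"name": "old_rule", "deployment_state": {"archived": True}},
    {"name": "no_state_rule"},
]

print([rule["name"] for rule in drop_archived(sample_rules)])
```

Only the rule flagged as archived is dropped; everything else passes through untouched, which is the behavior I wanted from the pipeline.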

Steps

- Grab the latest version of the rule_manager from https://github.com/chronicle/detection-rules/tree/main/tools/rule_manager

- Modify the rules.py file in the rule_cli directory at line 123, replacing the current function with the new one shown below

- Push this out to a private repository on your GitHub (make sure it’s private!), and don’t upload your .env file either.

```python
# Code snippet for line 123 in rules.py (make sure it is integrated properly)
def parse_rules(cls, rules: Sequence[Mapping[str, Any]]) -> List[Rule]:
    """Parse a list of rules into a list of Rule objects."""
    parsed_rules = []
    for rule in rules:
        # Skip rules that are flagged as archived in Chronicle
        if rule["deployment_state"].get("archived") is True:
            print("Ignoring, archived.")
        else:
            parsed_rules.append(Rules.parse_rule(rule))
    return parsed_rules
```

IDE, Build Environment, and LLM?

Once rule_manager is deployed to GitHub, you can bring it into your favorite IDE. VSCode may be the choice for many folks here, and that’s fine. If you go that route, not much will change besides doing development locally on your machine.

For this, though, I wanted to try something different and test out Google’s latest Gemini models built into their Project IDX platform. IDX is built on VSCode and is somewhat similar to Replit in the tools available: it has a full IDE and builds out a NixOS container, hosted in GCP, for you to work from.

IDX Setup

Setup is pretty straightforward. There is an “Import a repo” option that pulls your repository and prompts you to allow access, since your repository is private (right?).

After my repository was imported, I realized I had forgotten to add a README, so I asked IDX to generate one for me based on a simple input. Seems good enough!

Next up is using the VSCode extension to prettify the Yara-L rules. Since this is VSCode under the covers, we’ll click on the extensions icon on the left bar (4th down). IDX utilizes open-vsx, so we can just search for Chronicle Yara-L there and grab the latest version (tied to my GitHub user ID, the2dl). There is another one there under dansec from when I didn’t know what I was doing in open-vsx.

Once that’s installed, go ahead and update your settings via “Open Workspace Settings (JSON)” and drop in the settings from here.

Now that our extension and colors are good to go, let’s focus on getting our Python virtual environment stood up and pulling all the rules into IDX (this is also a good time to put your .env file in place).

```
rule-manager-896652:~/rule_manager{main}$ cd ..
rule-manager-896652:~$ python -m venv ./rule_manager
rule-manager-896652:~$ source rule_manager/bin/activate
(rule_manager) rule-manager-896652:~$ ls
rule_manager
(rule_manager) rule-manager-896652:~$ cd rule_manager/
(rule_manager) rule-manager-896652:~/rule_manager{main}$ ls
bin chronicle_api CONTRIBUTING.md include lib lib64 LICENSE pyvenv.cfg README.md requirements_dev.txt requirements.txt rule_cli rule_config.yaml
(rule_manager) rule-manager-896652:~/rule_manager{main}$ pip install -r requirements.txt
.....all packages install.....
(rule_manager) rule-manager-896652:~/rule_manager{main}$ python -m rule_cli -h
19-Feb-24 23:13:34 UTC | INFO | <module> | Rule CLI started
.....continued.....
```

Update your .gitignore file to ignore all the Python venv files so they don’t get synced to your repository.
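Because the virtual environment above was created inside the repository directory, its bin, include, lib, lib64, and pyvenv.cfg entries sit right next to the project files. As a rough sketch, a small helper like the one below could append the missing entries to .gitignore. The helper name `add_ignore_entries` and the `VENV_ENTRIES` list are my own illustration (the entries are taken from the directory listing above, plus .env), so adjust them to whatever your checkout actually contains.

```python
from pathlib import Path

# Entries taken from the directory listing above, plus the .env file.
VENV_ENTRIES = ["bin/", "include/", "lib/", "lib64", "pyvenv.cfg", ".env"]


def add_ignore_entries(gitignore: Path, entries: list[str]) -> list[str]:
    """Append entries missing from the .gitignore file and return what was added."""
    text = gitignore.read_text() if gitignore.exists() else ""
    present = {line.strip() for line in text.splitlines()}
    missing = [entry for entry in entries if entry not in present]
    if missing:
        # Start on a fresh line if the existing file does not end with a newline.
        separator = "" if text == "" or text.endswith("\n") else "\n"
        gitignore.write_text(text + separator + "\n".join(missing) + "\n")
    return missing
```

Calling `add_ignore_entries(Path(".gitignore"), VENV_ENTRIES)` from the repository root is safe to repeat, since entries already present are skipped.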

Synchronize the rules

For me, this synchronizes all the rules over. If you run into an error here, remember to have your .env file in place for authentication and API endpoint locations. Once completed, it will bring over all of your unarchived rules, which for me in my small tenant is 120 rules.
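If the sync fails, a quick pre-flight check of the .env file can rule out the obvious causes. The sketch below assumes the file uses plain KEY=VALUE lines. It does not know which variables rule_manager expects, so it only reports keys that are present but empty. The function names are hypothetical.

```python
from pathlib import Path


def read_env_file(path: Path) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in path.read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def empty_keys(path: Path) -> list[str]:
    """Return the keys that are present in the file but have no value."""
    return [key for key, value in read_env_file(path).items() if not value]
```

Running `empty_keys(Path(".env"))` before the sync gives a short list of settings to fill in, without printing any of the secret values themselves.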

Now that our rules have been imported into our IDE, it’s a good time to sync them to our repository as our base set of rules. For this initial sync, let’s push to our main branch, then create an additional branch called “devel” to mirror main. The devel branch is where you push all your rule changes, which later go through a pull request into main; once that pull request is approved, the workflow runs and pushes the rules to Chronicle.

Also note that IDX has some theme defaults that override some of my extension’s styles (in particular green text), but it’s not terrible.

Modify, test, synchronize

So let’s put it to the test. I need to modify one of my current Chronicle rules. In this scenario, we’ll modify the adfind_exec rule from above and clean up the regex.

In the screenshot above, I started by updating the regex to the /content/ format, which I prefer over the form it was using. Then I wondered whether we should search for any other flags, so I asked IDX AI, and it provided some examples I hadn’t covered, so I added them to my regex.

Validate the rule syntax against Chronicle’s Yara-L checker and ship it to GitHub for review.

A note: make sure to send it to the proper branch, which in our case is devel, so we can review it via a pull request in GitHub.

Pull it!

The next step is to create a pull request for the change. Click “Create pull request” to initiate this process.

From here, we would have a second team member verify it and merge the pull request. Since I’m the only one in my GitHub org, it’ll be me verifying my own work.

GitHub Actions: Build & Deploy via Pull

Once it’s ready to get pushed off to your Chronicle instance, you have multiple options for the next steps, but to follow what we’re doing here, this is the lifecycle we have been following.

The last piece to talk about is the workflows in GitHub. For this, I’ve set up a basic workflow that looks for pull requests being completed into the main branch, then executes to ship the rules off to the Chronicle tenant.

Here is my YAML file from my GitHub workflow:

```yaml
name: Deploy Chronicle Rules from Pulls

on:
  pull_request:
    types: [closed]
    # Only react to pull requests that target main
    branches: [main]

jobs:
  build-and-execute:
    # Skip pull requests that were closed without being merged
    if: github.event.pull_request.merged == true
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'
      - name: Install dependencies
        run: pip install -r requirements.txt
      - name: Get environment built
        run: echo "${{ secrets.MAIN_ENV }}" | base64 --decode > .env
      - name: Build the Python script
        run: python -m rule_cli --update-remote-rules
```

This runs every time a pull request into main is merged (meaning a fully verified rule change) and pushes the content into the Chronicle instance.

You’ll see I have secrets.MAIN_ENV in there. For this to work, you need to take your entire .env file contents, turn them into base64, and paste the result as a secret into the platform. You can achieve this by running cat .env | base64 -w 0 > env.txt, then taking the contents of env.txt and putting them into your GitHub secrets. This way you’re not storing your .env file in your repository, as mentioned many times.
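If you are on a machine where base64 -w 0 is not available, the same round trip can be sketched with the Python standard library. The helper names and the sample contents below are illustrative only; the real variable names come from your own .env file.

```python
import base64


def encode_env(text: str) -> str:
    """Return the single-line base64 form of the .env contents (like base64 -w 0)."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def decode_env(encoded: str) -> str:
    """Reverse encode_env, as base64 --decode does inside the workflow."""
    return base64.b64decode(encoded).decode("utf-8")


# Hypothetical contents used only to show the round trip.
sample = "EXAMPLE_KEY=example-value\n"
encoded = encode_env(sample)
assert "\n" not in encoded            # one line, safe to paste as a secret
assert decode_env(encoded) == sample  # the workflow gets the original file back
```

Keeping the encoded value on a single line matters because the workflow passes it through echo before decoding it.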

Then we can verify this worked by checking that the new adfind rule has been applied in Chronicle as seen below.

GitHub Actions: Scheduled Push

Another thing I want to accomplish is to push on a schedule (nightly, or whenever your team is not publishing new content; perhaps set a window where no new pull requests can be submitted). The purpose of this is to overwrite any changes made within the Chronicle UI, as nobody should be changing a rule without going through the approved process.
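Conceptually, the scheduled push comes down to spotting rules whose text in Chronicle no longer matches the repository copy. The sketch below illustrates that idea only; it is not how rule_manager performs its comparison, and the function names and sample rule text are hypothetical.

```python
import hashlib


def fingerprint(rule_text: str) -> str:
    """Hash the rule text, ignoring trailing whitespace differences."""
    normalized = "\n".join(line.rstrip() for line in rule_text.strip().splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_drift(local: dict[str, str], remote: dict[str, str]) -> list[str]:
    """Return names of rules whose remote text differs from the repository copy."""
    return sorted(
        name
        for name, text in local.items()
        if name in remote and fingerprint(text) != fingerprint(remote[name])
    )


# Hypothetical rule bodies: the remote copy of adfind_exec was edited in the UI.
local_rules = {"adfind_exec": "rule adfind_exec { original }"}
remote_rules = {"adfind_exec": "rule adfind_exec { tampered }"}
print(find_drift(local_rules, remote_rules))
```

Any rule name this returns is a candidate for being overwritten with the version that went through review.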

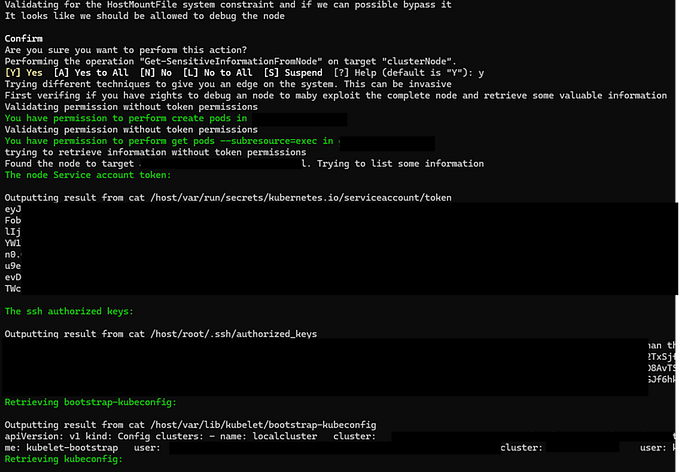

Let’s say a threat actor (or red team member) gets into your rules and breaks them so they ignore their attacks.

With a job running nightly (or as frequently as you want), you could help mitigate this. This is also an extreme situation; more than likely you’d just have a content creator making changes to code in the platform.

With this, we can see the rule in Chronicle was identified as different from the copy in the local GitHub repo, and it was overwritten to fix it.
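GitHub Actions evaluates cron schedules in UTC, so the local time you have in mind has to be converted first. Here is a small sketch of that arithmetic using a fixed UTC offset; the function name `utc_cron` is my own, and the offset of -5 assumes Eastern Standard Time.

```python
from datetime import datetime, timedelta, timezone


def utc_cron(hour: int, minute: int, utc_offset_hours: int) -> str:
    """Return a daily cron expression in UTC for a local wall-clock time."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    # The date is arbitrary; only the hour and minute end up in the expression.
    local = datetime(2024, 1, 1, hour, minute, tzinfo=local_tz)
    as_utc = local.astimezone(timezone.utc)
    return f"{as_utc.minute} {as_utc.hour} * * *"


# 12:15 AM EST (UTC-5) is 05:15 UTC.
print(utc_cron(0, 15, -5))
# 12:15 PM EST (UTC-5) is 17:15 UTC.
print(utc_cron(12, 15, -5))
```

A fixed offset does not follow daylight saving time, so a schedule computed for EST fires an hour later by the local clock while EDT is in effect.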

Here is the YAML for this (note: the crontab time is in UTC, so make sure you set that properly).

```yaml
name: Deploy Chronicle Rules (Scheduled)

on:
  schedule:
    # Run at 12:15 AM EST nightly (05:15 UTC)
    - cron: '15 5 * * *'

jobs:
  build-and-execute:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'
      - name: Install dependencies
        run: pip install -r requirements.txt
      - name: Get environment built
        run: echo "${{ secrets.MAIN_ENV }}" | base64 --decode > .env
      - name: Build the Python script
        run: python -m rule_cli --update-remote-rules
```

What about IDX?

As far as IDX goes, it’s a solid platform to develop in (given it’s mainly a re-skinned VSCode), and the AI can be helpful at times, but it has a very hard time understanding the difference between Yara and Yara-L. Even if you ask it to write a starter rule in Yara-L, it often gives it to you in Yara.

You can take code from your current rule and ask it to modify it in some way, add additional paths, etc., but as far as starting from scratch goes, it currently falls flat.

As you can see in the screenshot it somewhat understands it, but it mixes the languages consistently.

Whereas when I just want to update a line of code (I could easily hand-write it, but wanted to test out the logic from IDX), it works better.

Overall, it’s not bad, and I understand Yara-L is a far newer language, so it’ll take some time for it to understand the difference. It will be very interesting to see where this goes in the coming months and years.

It does do a pretty decent job of auto recommendations in your code, though. As you type, it’ll complete ahead for you, and I’ve often found it’ll even write proper Yara-L in those scenarios; maybe when it’s in the code itself, it realizes it’s Yara-L instead of regular Yara.

It did help me write the workflows for GitHub, though, as I had never written even a basic one for Python deployments. Like most of these platforms, it can easily help with the things it knows well, but the stuff it’s not sure about it just kind of mixes up.

Wrap-up

Hopefully this was helpful if you are trying to set up a workflow exclusively in GitHub and test out a new tool in Project IDX. You can easily replace all the steps above with VSCode and run it from your local machine as well; it’s completely up to you!

If you have any questions feel free to reach out on Twitter, GitHub, or in the comments!